Deep learning models have achieved remarkable results in computer vision and speech recognition in recent years. Within natural language processing, much of the work with deep learning methods has involved learning word vector representations through neural language models.

Today in this blog I will try to summarize a whitepaper written by Yoon Kim (New York University) which shows how they achieved excellent performance on different benchmarks by training a simple CNN with one layer of convolution on top of word vectors obtained from an unsupervised neural language model.

Model

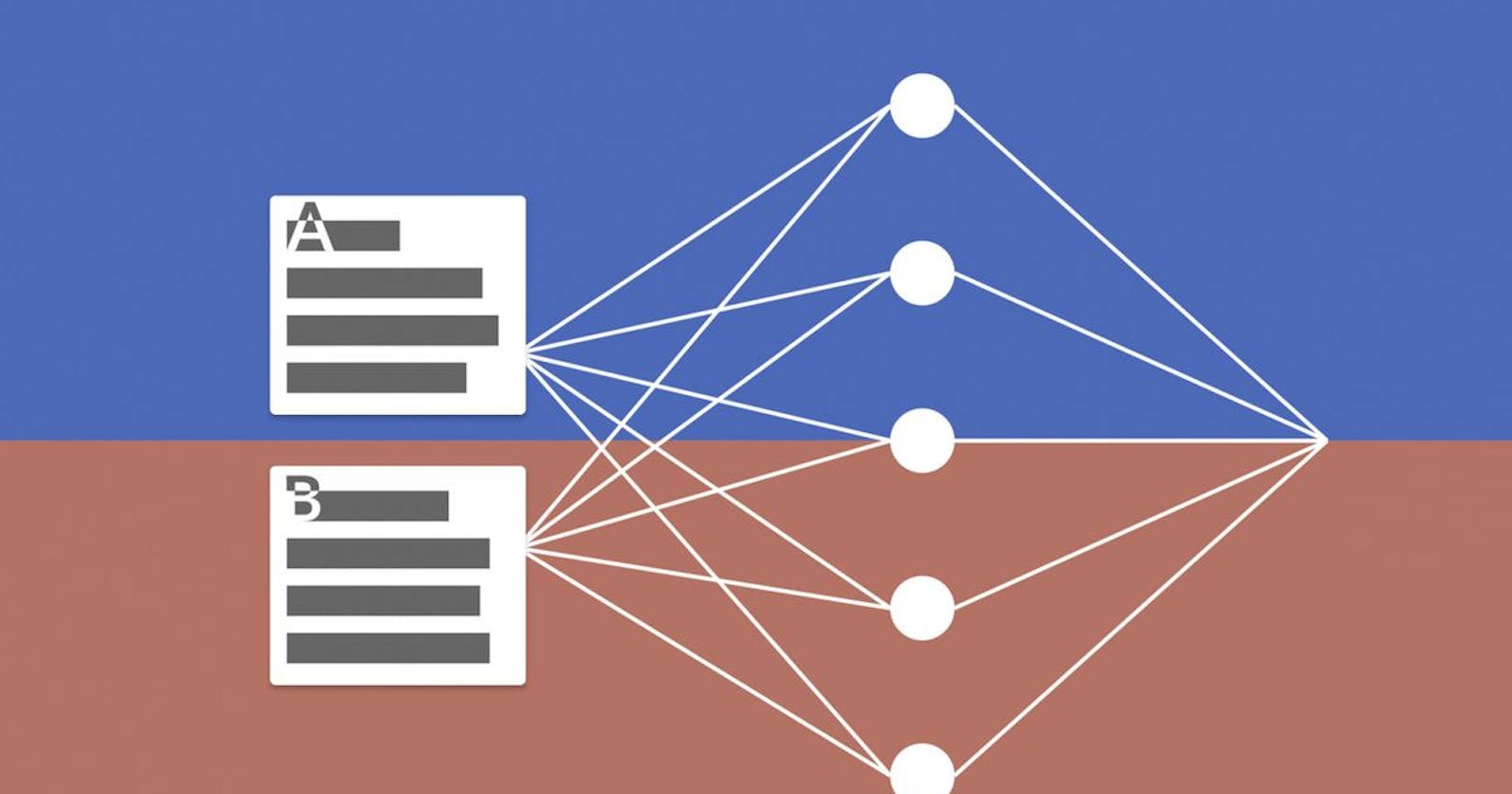

The proposed model architecture shown below is a slight variant of CNN architecture originally presented by Collobert in 2011. Model architecture with two channels for an example sentence.

Letbe the k-dimensional word vector corresponding to the i-th word in the sentence.

A sentence of length n (padded where necessary) is represented as

The word vectors are concatenated. If we represent as the general concatenation of word vectors and W as convolution filter with a convolution window or kernel h, then a feature will be generated by the convolution operation which can be written as below

Here b is the bias and f is a non liner function like hyperbolic tangent. This filter is applied to all possible window of words in the sentence to produce a feature map which can be written as

Then a max pooling operation is applied over the feature map which takes the maximum value as the feature corresponding to the filter. This is the process for extracting one feature from one filter but in real life the model use multiple filters with different kernel sizes to extract various features and all these operations runs in parallel.

Regularization

For regularization, author used the dropout on the penultimate layer with a constraint on l2-norms of the weight vectors. The usual layer output given by

but here we presented it as below due to the dropouts

Where r is a ‘masking’ vector of Bernoulli random variables with probability p of being 1 and it get multiplied(element wise) with layer z.

Datasets and Experimental Setup

Model was tested against various datasets and summary of the Dataset stats given below

Where

- c : Number of target classes

- l : Average sentence length

- N : Datasetsize

- |V| : Vocabulary size

- |Vpre| : Number of words present in the set of pretrained word vectors

- Test : Test set size and if Test set not present the paper uses cv(cross validation)

Hyperparameters & Training

For all datasets model used rectified linear units, filter windows (h) of 3, 4, 5 with 100 feature maps each, dropout rate (p) of 0.5, l2 constraint (s) of 3, and mini-batch size of 50. These values were chosen via a grid search on the SST-2 dev set.

Pre-trainedWord Vectors

Model used the publicly available word2vec vectors that were trained on 100 billion words from Google News. The vectors have dimensionality of 300(i.e k = 300) and were trained using the continuous bag-of-words architecture. Words not present in the set of pre-trained words are initialized randomly.

Model variations

Author deployed various CNN models and you can see their performance comparison with various other models on below table

- CNN-rand: All words are randomly initialized

- CNN-static: A model with pre-trained vectors from word2vec. All words including the unknown ones that are randomly initialized are kept static and only the other parameters of the model are learned.

- CNN-non-static: Same as above but the pretrained vectors are fine-tuned for each task.

- CNN-multichannel: A model with two sets of word vectors. Each set of vectors is treated as a 'channel' and each filter is applied to both channels, but gradients are backpropagated only through one of the channels. Hence the model is able to fine-tune one set of vectors while keeping the other static. Both channels are initialized with word2vec.

Results

If we analyze the table, we can see that compare to models which use randomly initialized word vectors the once with pretrained word vectors performed more efficiently. These results suggest that the pretrained vectors are good, ‘universal’ feature extractors and can be utilized across datasets. Finetuning the pre-trained vectors for each task gives still further improvements (CNN-non-static).

Some further observations are

- Dropout proved to be such a good regularizer that it was fine to use a larger than necessary network and simply let dropout regularize it. Dropout consistently added 2%–4% relative performance.

- Author also experimented with another word vector trained with Wikipedia data but found that word2vec gave far superior result.

- Adadelta gave similar results to Adagrad but required fewer epochs.

Conclusion

The paper experimented that by using a simple CNN with one layer on top of the word vectors generated from pretrained models like word2vec with little hyperparameter tuning we can achieve higher or at least at par results compare to other complicated models available on the field. Also the results add to the well-established evidence that unsupervised pre-training of word vectors is an important ingredient in deep learning for NLP.

You can read the original paper here

Hope you have liked the post. Happy Learning!