Deep learning is a specific subfield of machine learning that emphasizes learning successive layers of increasingly meaningful representations of data. The "deep" in deep learning is not a reference to any kind of deeper understanding achieved by the approach; rather, it stands for this idea of successive layers of representations.

Modern deep learning often involves tens or even hundreds of successive layers of representations, all learned automatically from exposure to training data. Other approaches to machine learning, by contrast, tend to focus on learning only one or two layers of representations of the data; hence they are sometimes called shallow learning.

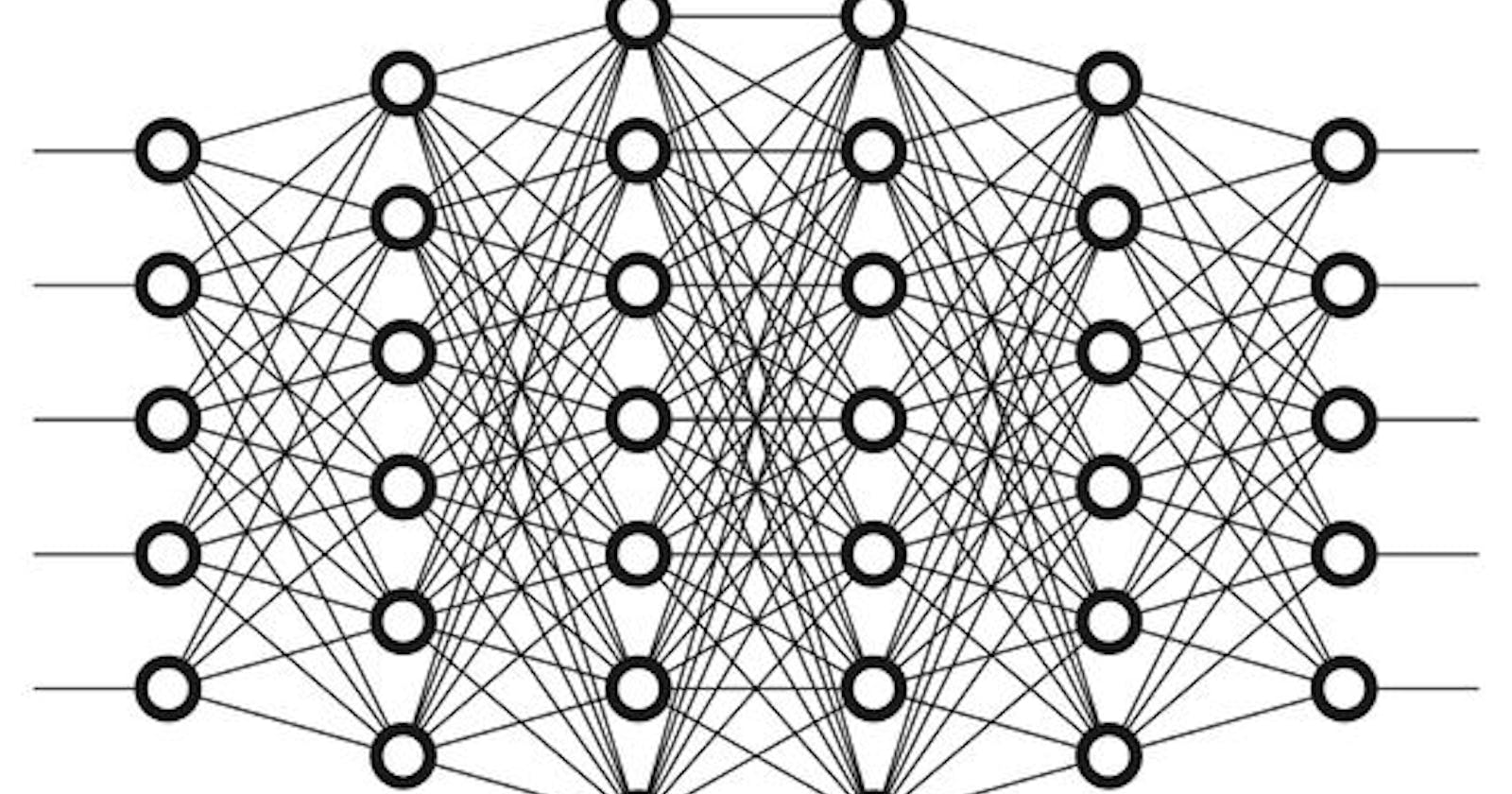

In deep learning, these layered representations are almost always learned via models called neural networks, structured in literal layers stacked on top of each other. The term "neural network" is a reference to neurobiology. Although some of the central concepts in deep learning were developed in part by drawing inspiration from our understanding of the brain, deep learning models are not models of the brain. There is no evidence that the brain implements anything like the learning mechanisms used in modern deep learning models.
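To make "layers stacked on top of each other" concrete, here is a minimal pure-Python sketch of a forward pass through a small stack of fully connected layers, each followed by a ReLU. It keeps every intermediate output so you can see each layer producing a new representation of the input. The helper names (`dense_layer`, `random_layer`, `forward`) and the layer sizes are hypothetical choices for illustration, and the weights are random here; in a real model they would be learned from training data.

```python
import random


def dense_layer(inputs, weights, biases):
    """One fully connected layer followed by a ReLU nonlinearity."""
    return [max(0.0, sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def random_layer(n_in, n_out, rng):
    """Hypothetical initializer: uniform random weights, zero biases."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(x, layers):
    """Pass x through each layer in turn, keeping every intermediate representation."""
    representations = [x]
    for weights, biases in layers:
        x = dense_layer(x, weights, biases)
        representations.append(x)
    return representations


rng = random.Random(42)
sizes = [4, 16, 16, 8, 3]  # input width, three hidden widths, output width (assumed)
layers = [random_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

reps = forward([0.5, -1.0, 2.0, 0.1], layers)
for depth, r in enumerate(reps):
    print(f"representation {depth}: {len(r)} values")
```

Adding more entries to `sizes` makes the stack deeper; a "shallow" model in this picture would have only one or two layers between input and output.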

It would be confusing and counterproductive for newcomers to the field to think of deep learning as being in any way related to neurobiology. You don't need that shroud of "just like our minds" mystique and mystery, and you may as well forget anything you may have read about hypothetical links between deep learning and biology. Instead, think of deep learning as a mathematical framework for learning representations from data.

So deep learning is, technically, a multistage way to learn data representations. It's a simple idea, but, as it turns out, very simple mechanisms, sufficiently scaled, can end up looking like magic.
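As a rough illustration of how the layers in such a stack can be "learned from exposure to training data", here is a minimal sketch of a two-layer network trained with plain gradient descent and hand-written backpropagation. Gradient descent is assumed here as the learning mechanism (this section does not name one), and the function `train_two_layer`, the toy target y = x², the hidden width, learning rate, and epoch count are all hypothetical choices for illustration only.

```python
import random


def train_two_layer(data, hidden=8, lr=0.01, epochs=200, seed=0):
    """Fit y from x with one ReLU hidden layer and a linear output, via per-sample SGD.

    Returns the mean squared error recorded during each epoch.
    """
    rng = random.Random(seed)
    w1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]  # layer 1 weights
    b1 = [0.0] * hidden                                    # layer 1 biases
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]  # layer 2 weights
    b2 = 0.0                                               # layer 2 bias
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in data:
            # Forward pass: first representation h, then the prediction.
            z = [w1[j] * x + b1[j] for j in range(hidden)]
            h = [v if v > 0 else 0.0 for v in z]
            pred = sum(w2[j] * h[j] for j in range(hidden)) + b2
            err = pred - y
            total += err * err
            # Backward pass: d(err^2)/d(pred) = 2 * err, then the chain rule per layer.
            g = 2.0 * err
            for j in range(hidden):
                grad_h = g * w2[j]                       # uses w2[j] before it is updated
                grad_z = grad_h if z[j] > 0 else 0.0     # ReLU passes gradient only when active
                w2[j] -= lr * g * h[j]
                w1[j] -= lr * grad_z * x
                b1[j] -= lr * grad_z
            b2 -= lr * g
        losses.append(total / len(data))
    return losses


xs = [i / 5 - 1 for i in range(11)]  # -1.0, -0.8, ..., 1.0
data = [(x, x * x) for x in xs]
losses = train_two_layer(data)
print(f"first epoch MSE: {losses[0]:.4f}, last epoch MSE: {losses[-1]:.4f}")
```

Each update is a small, mechanical step; the point of the sketch is that both layers' parameters are adjusted automatically from the data rather than set by hand.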